The AI world was rocked this week when Berkeley researchers announced they’d replicated the core capabilities of DeepSeek’s groundbreaking R1-Zero AI model for just $30—less than the price of a fancy dinner. This jaw-dropping feat, led by PhD candidate Jiayi Pan, challenges everything we thought we knew about the cost and scalability of advanced AI systems. Here’s why this $30 experiment could upend the trillion-dollar AI industry.

The $30 Breakthrough: Smaller, Smarter, Cheaper

The UC Berkeley team trained a 3-billion-parameter language model using reinforcement learning on the Countdown game, a math puzzle where players combine numbers to hit a target value Source. Key stats:

- Cost: $30 total (vs. DeepSeek’s claimed $5M training cost and OpenAI’s $15M+ GPT-4 development)

- Efficiency: Priced at $0.55 per million tokens, roughly 27x cheaper than the $15 per million listed for OpenAI’s o1 API Source

- Scale: Tiny compared to DeepSeek-R1’s 671B parameters, yet it achieved self-verification and iterative problem-solving Source.

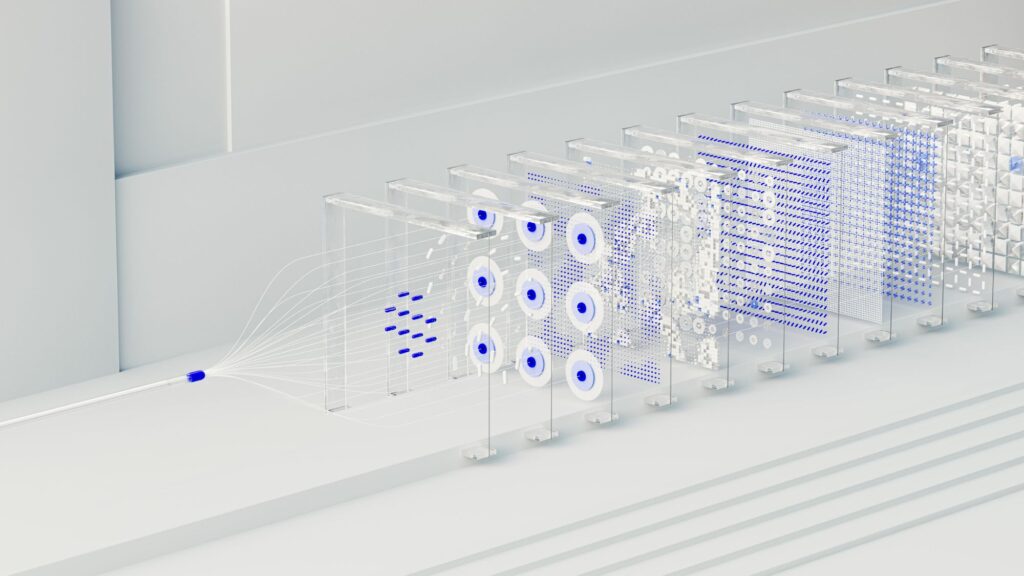

The AI started with random guesses but learned to refine answers through trial and error—a process mimicking human reasoning. At 1.5B parameters, it began revising strategies; by 3B, it solved problems efficiently Source.

The Countdown Game: AI’s New Training Ground

The secret sauce? A British game show-inspired challenge. Researchers used Countdown’s arithmetic puzzles to teach the AI iterative reasoning:

- Start with random outputs

- Verify solutions against ground truths

- Optimize strategies through reinforcement learning Source.
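The verification step in the loop above can be sketched as a rule-based reward function. This is a minimal illustration, not TinyZero's actual code: the function name `countdown_reward`, the binary 1.0/0.0 reward, and the rule that every given number must be used exactly once are all assumptions made for the example.

```python
import ast
import operator
from collections import Counter

# Binary operators allowed in a Countdown expression.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node, used):
    """Recursively evaluate a parsed expression, recording each number used."""
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    # Anything else (function calls, names, unary minus) is rejected.
    raise ValueError("unsupported syntax in expression")


def countdown_reward(expression, numbers, target):
    """Hypothetical reward: 1.0 if the expression uses each given number
    exactly once (an assumed rule) and hits the target, otherwise 0.0."""
    try:
        tree = ast.parse(expression, mode="eval")
        used = []
        value = _evaluate(tree.body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    if Counter(used) != Counter(numbers):
        return 0.0
    return 1.0 if abs(value - target) < 1e-9 else 0.0
```

For example, `countdown_reward("(25 - 5) * 3", [25, 5, 3], 60)` returns `1.0`, while `countdown_reward("25 + 5 + 3", [25, 5, 3], 60)` returns `0.0`. Because the answer can be checked mechanically like this, no human labeling is needed to score the model's attempts, which is part of what keeps this kind of training cheap.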

Pan’s team found that smaller models (500M parameters) struggled, but scaling to 3B–7B unlocked advanced skills, suggesting that a few billion parameters, rather than hundreds of billions, can be enough for this kind of task Source.

Cost Wars: DeepSeek vs. OpenAI vs. Berkeley

The $30 experiment casts doubt on sky-high AI development costs:

| Model | Training Cost | Price per Million Tokens | Parameters |
|---|---|---|---|
| Berkeley TinyZero | $30 | $0.55 | 3B |
| DeepSeek-R1 | $5M* | $0.55 | 671B |
| OpenAI o1 | $78M+ | $15 | ~1.8T |

*Critics like AI researcher Nathan Lambert argue DeepSeek’s real annual costs likely exceed $500M–$1B when factoring in staff and infrastructure Source. Meanwhile, AI training costs have ballooned 4,300% since 2020—a trend Berkeley’s work could reverse Source.
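The headline ratios quoted in this piece are easy to sanity-check. The short sketch below uses only the per-million-token prices from the table and the 4,300% figure from the footnote; the helper names `price_ratio` and `growth_multiplier` are made up for illustration.

```python
def price_ratio(expensive, cheap):
    """How many times more expensive one per-token price is than another."""
    return expensive / cheap


def growth_multiplier(percent_increase):
    """Convert a percentage increase into a final/initial multiplier."""
    return 1 + percent_increase / 100


# Prices per million tokens as listed in the table above.
ratio = price_ratio(15.0, 0.55)
# The 4,300% rise in training costs quoted in the footnote.
multiplier = growth_multiplier(4300)

print(f"$15 vs. $0.55 pricing: {ratio:.1f}x")                  # 27.3x
print(f"4,300% increase: {multiplier:.0f}x the starting cost")  # 44x
```

Note that a 4,300% increase means costs are 44 times the starting level, not 43 times, because the original 100% is still included.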

Implications: A New Era of Accessible AI?

- Democratization: TinyZero’s GitHub release lets developers tinker with state-of-the-art RL for less than a video game Source.

- Market Shakeup: DeepSeek’s rise already erased $1T+ from U.S. tech stocks Source. If $30 models catch on, trillion-dollar AI bets by Google/Meta/OpenAI face existential questions.

- Efficiency Focus: The success of “mixture of experts” architectures (activating only relevant model subsections) hints at leaner futures Source.
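To make "activating only relevant model subsections" concrete, here is a toy sketch of generic top-k expert routing. It is not DeepSeek's actual routing scheme: the function names, the scalar "experts", and the hard-coded gate scores are all hypothetical stand-ins for what would be learned neural network components.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a dict mapping expert index -> mixing weight; every other
    expert is skipped entirely for this input.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return {i: probs[i] / mass for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Combine the outputs of only the selected experts."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical toy experts: each is just a scalar function.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # in a real model, produced by a learned router
weights = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
```

With `k=2`, only experts 1 and 2 run for this input; the other two are never evaluated. That is the source of the savings: the total parameter count can be large while the compute spent per token stays close to that of a much smaller model.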

Skepticism & Challenges

Not all are convinced. TinyZero’s skills are narrow (math puzzles ≠ general reasoning), and scaling to broader tasks would require far more resources Source. Still, as Pan tweeted: “It just works!” Source—a rallying cry for affordable AI innovation.

The Bottom Line

Berkeley’s $30 model won’t replace GPT-4 tomorrow, but it’s a wake-up call. When a university team can reproduce a hallmark behavior of cutting-edge reasoning models on a narrow task for pocket change, the industry’s “bigger is better” mantra starts to crack. As one analyst put it: “The shift has begun” Source. The race is now on to see who can build the most with the least, and that’s a game everyone can afford to play.